Cooking

10 Must-Try Recipes for Busy Weeknights

Introduction. Busy weeknights call for quick, delicious, and easy-to-make meals. Whether you're a seasoned chef or a cooking novice, these 10 must-try recipes will save you time and satisfy your...

16 Jul 2025

How to Cook Delicious Meals on a Budget

Introduction to Budget-Friendly Cooking. Eating delicious meals doesn't have to mean spending a fortune. With a bit of planning and creativity, you can prepare mouth-watering dishes that are kind...

17 Jul 2025

How to Cook Delicious Meals Easily

Effortless Gourmet: Simple Steps to Cook Delicious Meals at Home. Discover the joy of cooking with these simple steps to prepare delicious meals that will impress your family and friends. Whether...

15 Jul 2025

Easy Recipes for Quick Weeknight Dinners

Simple and Delicious Weeknight Dinner Ideas for Busy Families. Finding time to prepare a nutritious and delicious dinner during the week can be a challenge for many families. With these easy...

16 Jul 2025

Communication

Secrets to Mastering Public Speaking Skills

Introduction to Mastering Public Speaking. Public speaking is an essential skill that can significantly impact your personal and professional life. Whether you're delivering a presentation at work, speaking at a conference, or giving a toast at a wedding, the ability to communicate effectively in front of an audience is invaluable. This article will explore expert tips and strategies to help you master public speaking skills and deliver your message with confidence and clarity. Understand Your Audience. Before you step onto the stage, it's crucial to understand who your audience is. Tailoring your message to meet their interests, knowledge level, and expectations can make your speech...

14 Jul 2025

Finance

Innovative Ways to Save Money Fast

Introduction. In today's fast-paced world, saving money quickly is more important than ever. Whether you're saving for a rainy day, a big purchase, or just looking to improve your financial...

15 Jul 2025

The Ultimate Guide to Personal Finance

Introduction to Personal Finance. Personal finance is a crucial aspect of everyone's life, yet many find it overwhelming. This guide is designed to simplify the complexities of managing your...

15 Jul 2025

Smart Strategies for Investing in Stocks

Introduction to Stock Market Investing. Investing in the stock market can be a lucrative way to build wealth over time. However, it requires knowledge, strategy, and patience. This guide will...

16 Jul 2025

The Beginner's Guide to Investing Wisely

Introduction to Smart Investing. Investing wisely is not just about picking the right stocks or assets; it's about understanding the fundamentals of the market, knowing your financial goals, and...

17 Jul 2025

Fitness

Effective Home Workouts with No Equipment Needed

Transform Your Body with No-Equipment Home Workouts. In today's fast-paced world, finding time to hit the gym can be challenging. However, the lack of equipment or gym membership shouldn't stop...

23 Sep 2025

Best Stretches to Improve Flexibility and Relax

Unlock Your Body's Potential: Essential Stretches for Flexibility and Relaxation. In today's fast-paced world, maintaining flexibility and finding moments of relaxation are more important than...

23 Sep 2025

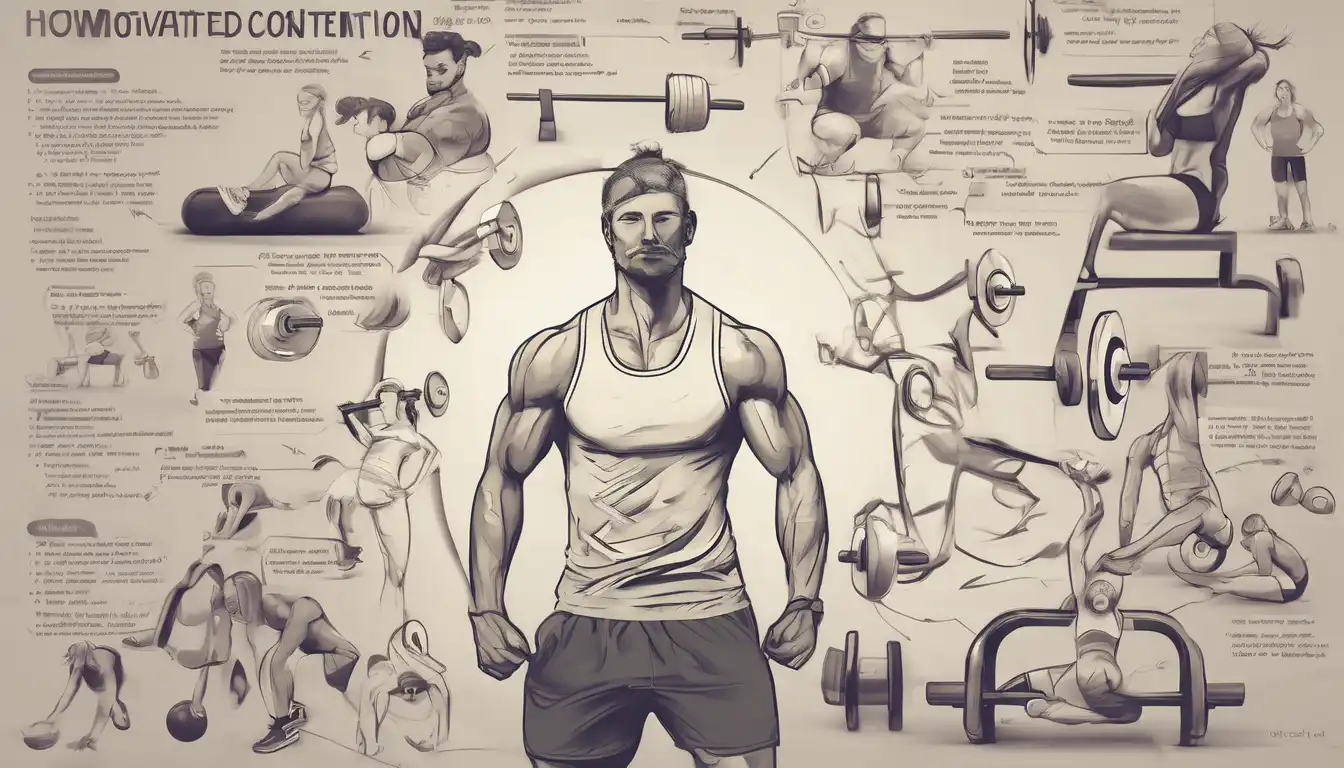

How to Stay Motivated and Consistent in Exercise

The Foundation of Sustainable Fitness Motivation. Maintaining motivation and consistency in exercise is one of the biggest challenges fitness enthusiasts face. Whether you're just starting your...

23 Sep 2025

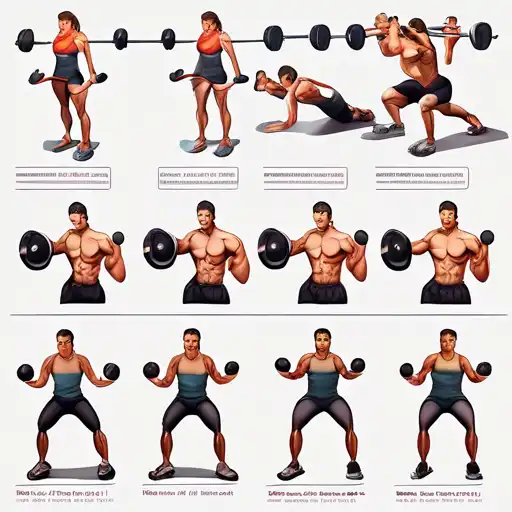

The Best Workout Routines for Beginners

Introduction to Beginner Workout Routines. Starting a fitness journey can be overwhelming for beginners. With so many options available, it's hard to know where to begin. This guide is designed to...

15 Jul 2025

Blogging

How to Start a Successful Blog Fast

Introduction to Fast-Track Blogging Success. Starting a blog can seem daunting, but with the right approach, you can launch a successful blog quickly. This guide will walk you through the...

17 Jul 2025

How to Start a Successful Blog Today

Introduction to Blogging Success. Starting a blog in 2024 might seem daunting, but with the right approach, it can be a rewarding venture. Whether you're looking to share your passion, establish...

15 Jul 2025

How to Start a Successful Blog Quickly

Introduction to Blogging Success. Starting a blog can seem daunting at first, but with the right approach, you can set up a successful blog quickly. This guide will walk you through the essential...

16 Jul 2025

Decor

Creative DIY Projects for Home Decor

Transform Your Home with These Creative DIY Projects. Looking to add a personal touch to your home decor? DIY projects are a fantastic way to infuse your space with personality and creativity. Not...

16 Jul 2025

Easy DIY Home Decor Ideas for All

Transform Your Space with These DIY Home Decor Ideas. Looking to refresh your home without breaking the bank? DIY home decor projects are a fantastic way to personalize your space while staying...

15 Jul 2025

Easy DIY Home Decor Ideas for Beginners

Simple and Creative DIY Home Decor Projects for Newbies. Embarking on DIY home decor projects can be a thrilling way to personalize your living space without breaking the bank. Whether you're a...

17 Jul 2025

Marketing

The Complete Guide to Digital Marketing

Introduction to Digital Marketing. In the ever-evolving digital landscape, mastering digital marketing is crucial for businesses aiming to thrive online. This guide will walk you through the essential strategies and tools needed to excel in digital marketing. Understanding Digital Marketing. Digital marketing encompasses all marketing efforts that use an electronic device or the internet. Businesses leverage digital channels such as search engines, social media, email, and other websites to connect with current and prospective customers. Key Components of Digital Marketing. Search Engine Optimization (SEO): the practice of increasing the quantity and quality of traffic to your website...

15 Jul 2025

Health

How to Improve Your Sleep Quality

Introduction to Better Sleep. Sleep is a cornerstone of good health, yet many struggle to achieve restful nights. Improving your sleep quality can enhance your mood, energy levels, and overall...

15 Jul 2025

The Science Behind Effective Weight Loss

Understanding the Fundamentals of Weight Loss. Weight loss is a journey that millions embark on every year, yet many find themselves struggling to achieve lasting results. The science behind...

17 Jul 2025

The Science Behind Better Sleep Habits

The Importance of Quality Sleep. Quality sleep is foundational to our overall health and well-being. It affects our mood, energy levels, and even our weight. Understanding the science behind...

16 Jul 2025

Biotechnology Breakthroughs That Are Saving Lives

Revolutionary Advances in Biotechnology Transforming Healthcare. In recent years, biotechnology has emerged as a beacon of hope, offering groundbreaking solutions to some of the most pressing...

18 Jul 2025

Technology

Cybersecurity Threats You Can't Afford to Ignore

Understanding the Landscape of Cybersecurity Threats. In today's digital age, cybersecurity threats have become more sophisticated and pervasive, posing significant risks to businesses of all...

22 Jul 2025

The Impact of 5G on Mobile Gaming

The Dawn of a New Era in Mobile Gaming with 5G. The advent of 5G technology is set to transform the mobile gaming landscape in unprecedented ways. With its ultra-low latency, high-speed...

19 Jul 2025

The Power of Predictive Analytics in Business

Understanding Predictive Analytics. Predictive analytics stands at the forefront of modern business strategies, offering unparalleled insights into future trends, customer behaviors, and potential...

22 Jul 2025

Virtual Reality: The Next Big Thing in Tech

Introduction to Virtual Reality. Virtual Reality (VR) is no longer just a figment of science fiction. It's here, and it's transforming the way we interact with digital content. From gaming to...

02 Aug 2025

Travel

The Best Travel Destinations for Adventure Lovers

Discover the World's Most Exciting Adventure Destinations. For those who crave excitement and yearn to explore the unknown, the world is full of breathtaking destinations that promise...

16 Jul 2025

Top 5 Travel Destinations for Adventure Lovers

5 Ultimate Adventure Spots for Thrill Seekers. For those who crave excitement and adrenaline, the world is full of incredible destinations that offer unforgettable adventures. From towering...

15 Jul 2025

The Best Travel Destinations for 2023

Discover the Best Travel Destinations for 2023. As the world opens up, 2023 promises to be a year filled with unforgettable travel experiences. Whether you're seeking serene beaches, bustling...

15 Jul 2025

Recent Posts

Agile Methodology in Software Project Management

Introduction to Agile Methodology. Agile methodology has revolutionized the way software projects are managed and delivered. Unlike traditional waterfall approaches that follow a linear,...

26 Sep 2025

Sustainable Computing: Eco-Friendly Hardware Solutions

Embracing Sustainable Computing Through Eco-Friendly Hardware. As digital transformation accelerates globally, the environmental impact of computing infrastructure has become increasingly...

26 Sep 2025

The Evolution of Computer Processors Over Time

The Dawn of Computing: Early Processor Technologies. The evolution of computer processors represents one of the most remarkable technological journeys in human history. Beginning with primitive...

26 Sep 2025

How to Choose the Right Computer Components

Introduction to Computer Component Selection. Building your own computer can be an incredibly rewarding experience, but choosing the right components is crucial for optimal performance and value....

26 Sep 2025

Building a High-Performance Gaming PC Guide

Introduction to High-Performance Gaming PC Construction. Building a high-performance gaming PC represents one of the most rewarding experiences for gaming enthusiasts. Unlike pre-built systems,...

26 Sep 2025

Latest Innovations in Computer Hardware Technology

Revolutionary Advances in Computer Hardware Technology. The landscape of computer hardware technology is undergoing unprecedented transformation, with innovations emerging at an accelerated pace....

26 Sep 2025

Machine Learning vs Traditional Programming Approaches

Understanding the Fundamental Differences Between Machine Learning and Traditional Programming. In today's rapidly evolving technological landscape, the distinction between machine learning and...

26 Sep 2025

How to Get Started with Machine Learning Projects

Introduction to Machine Learning Projects. Machine learning has transformed from an academic concept to a practical tool that businesses and individuals use daily. Whether you're a student,...

25 Sep 2025

Practical Applications of Machine Learning in Healthcare

How Machine Learning is Revolutionizing Healthcare Delivery. Machine learning has emerged as a transformative force in healthcare, offering innovative solutions to longstanding challenges. From...

25 Sep 2025

Breaking Down Artificial Intelligence for Beginners

What Exactly Is Artificial Intelligence? Artificial Intelligence, commonly referred to as AI, represents one of the most transformative technologies of our time. At its core, AI involves creating...

25 Sep 2025

🔥 Popular Posts

- Networking Tools for Troubleshooting Connection Issues 17814 views

- Robotics: The Intersection of Technology and Innovation 14899 views

- Understanding the Psychology Behind Gaming Addiction 3001 views

- Machine Learning Algorithms Every Developer Should Know 2194 views

- Artificial Intelligence in Everyday Life: What's Next? 1958 views

- How to Start a Successful Blog Fast 1363 views

- How to Start a Successful Blog Quickly 1345 views

- How to Start a Successful Blog Today 1341 views

- Quantum Computing Applications You Didn't Know About 1113 views

- Biotechnology Breakthroughs That Are Saving Lives 883 views

- Gadgets That Make Your Home Smarter 653 views

- Internet of Things: Connecting the World 644 views

- The Security Challenges of IoT Devices 629 views

- How Smart Gadgets Are Changing Our Lives 617 views

- IoT in Healthcare: A Game Changer 610 views

- The Future of Hardware: What's Next? 604 views

- DevOps: Bridging the Gap Between Teams 603 views

- The Best Tech Gadgets for Productivity 601 views

- DevOps Culture: Collaboration and Efficiency 600 views

- Building a PC: A Beginner's Guide 597 views